Key Takeaways

- Google May Not Have Indexed Your Site Yet: New websites take time to appear; use Google Search Console to submit and monitor indexing.

- Check for Blockers: Misconfigured robots.txt, noindex tags, or CMS settings can prevent indexing.

- Fix Technical SEO Issues: Ensure clean site structure, fast loading speeds, mobile-friendliness, and no broken links.

- Create High-Quality Content: Avoid thin or duplicate content—focus on helpful, original content that builds trust.

- Build Backlinks & Authority: Quality external links help Google discover and trust your site faster.

- Use Google’s Tools: Regularly monitor Search Console for indexing, errors, or penalties.

Why Can’t I Find My Website On Google Search Engine?

It’s a moment filled with anticipation: you’ve poured hours, creativity, and maybe even a good chunk of your budget into building a brand-new website. You hit “launch,” eager to share your creation with the world. You dash over to Google, type in your site’s name, press Enter, and… crickets. Your site is nowhere to be found. Or perhaps your shiny new site is languishing on page 10 of the search results, a place few mortals ever venture. That sinking feeling? It’s a common frustration, but don’t despair! It’s rarely a sign that your website is doomed. More often, it’s a puzzle waiting to be solved.

So why isn’t your newly minted website showing up on Google? Let’s dive into the most common culprits and, more importantly, the actionable steps you can take to get your website noticed by the world’s largest search engine.

Google Hasn’t Indexed Your Site Yet

This is, by far, the most frequent reason, especially for websites fresh off the digital press. Think of Google as a colossal library, constantly sending out robotic librarians (web crawlers, spiders, or Googlebot) to discover new books (web pages) and add them to its catalog (the Google index). When your site is brand new, it’s like an uncataloged book; it takes time for the librarians to find it, read it, and decide where it fits on the shelves.

The Crawling and Indexing Ballet

Googlebot discovers new content primarily by following links from known sites to new ones or by processing sitemaps you provide. Once found, it “crawls” the page, rendering its content much like a browser does, to understand what it’s about. If deemed valuable and not duplicative, the page is then “indexed” – stored in Google’s massive database, making it eligible to appear in search results.

Patience is a Virtue (But Proactivity Helps!)

The indexing timeframe can range from a few days to several weeks. While patience is essential, you’re not entirely at the mercy of the waiting game. You can proactively help Google find your site by submitting an XML sitemap through Google Search Console (GSC). GSC’s URL Inspection tool also allows you to request indexing for specific important pages, which can sometimes expedite the process.
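An XML sitemap is simply a list of your URLs in the format defined by the sitemaps.org protocol. As a minimal sketch, assuming you already have a list of your site’s absolute URLs (the `build_sitemap` helper and the `yourdomain.com` addresses are illustrative), Python’s standard library can generate one:

```python
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal XML sitemap listing the given absolute URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in query strings for us.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    pages = ["https://yourdomain.com/", "https://yourdomain.com/about"]
    print(build_sitemap(pages))
```

Saved as `sitemap.xml` at your site root, the file’s URL can then be submitted under Sitemaps in Google Search Console. Many CMSs and SEO plugins generate this file automatically, so a script like this is mostly useful for custom-built or static sites.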

Crawl Budget – Google’s Resource Allocation

Google doesn’t have infinite resources to crawl every single page on the internet constantly. It allocates a “crawl budget” to each website, which is roughly the number of URLs Googlebot can and wants to crawl. New sites, or sites with few external links, might initially have a lower crawl budget. Improving site speed, internal linking, and content quality can positively influence this over time.

How to Check Your Index Status

The simplest check is to type `site:yourdomain.com` (replacing `yourdomain.com` with your actual domain) into the Google search bar. If results from your site appear, congratulations, you’re in the index! If not, Google either hasn’t found your site yet or has encountered issues preventing indexing.

You’re Blocking Search Engines

Sometimes, the reason Google isn’t listing your site is because you’ve inadvertently told it not to. This often happens with leftover settings from development or misunderstandings about how certain files work.

The `robots.txt` File – Your Website’s Gatekeeper

This simple text file, located at the root of your domain (e.g., `yourdomain.com/robots.txt`), gives instructions to web crawlers. A common culprit is a `User-agent: *` line followed by `Disallow: /` on the next line, which tells all crawlers not to access any part of your site. This rule is often used on staging sites to keep them out of search results and then accidentally carried over to the live site. Make sure your `robots.txt` allows access to your important content. You can also declare the location of your sitemap here (`Sitemap: https://yourdomain.com/sitemap.xml`).
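To sanity-check your rules before Googlebot does, Python’s standard `urllib.robotparser` module can evaluate a robots.txt file against a URL. A minimal sketch (the `is_crawlable` helper and both rule sets are illustrative; the live rules are just one example configuration) comparing the staging-style `Disallow: /` with a more selective setup:

```python
from urllib.robotparser import RobotFileParser


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if these robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# The staging-site rules that often leak onto a live site: block everything.
STAGING_RULES = """\
User-agent: *
Disallow: /
"""

# An example live configuration: block only the admin area, advertise the sitemap.
LIVE_RULES = """\
User-agent: *
Disallow: /wp-admin/
Sitemap: https://yourdomain.com/sitemap.xml
"""

if __name__ == "__main__":
    print(is_crawlable(STAGING_RULES, "https://yourdomain.com/about"))  # False
    print(is_crawlable(LIVE_RULES, "https://yourdomain.com/about"))     # True
```

Treat the result as an approximation: `urllib.robotparser` does simple prefix matching and does not implement Google’s `*` and `$` wildcard extensions, so URL Inspection in Search Console remains the authoritative check.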

`noindex` Meta Tags or X-Robots-Tag Headers – Page-Level Blockers

A `noindex` directive tells search engines not to include a specific page in their results. It can be set as a meta tag in the `<head>` section of a page’s HTML (e.g., `<meta name="robots" content="noindex, follow" />`) or as an HTTP response header (`X-Robots-Tag: noindex`). Developers often add these during site construction and forget to remove them at launch. Check the source code of your key pages and the HTTP headers your server returns. Note that Google has to crawl a page to see its `noindex`, so a page blocked in `robots.txt` can’t have that directive read.
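Checking many pages by hand gets tedious. The sketch below assumes you have already fetched a page’s HTML and response headers (for example with `urllib.request` or a crawler) and looks for `noindex` in either place; `has_noindex` and `RobotsMetaParser` are hypothetical helpers, and passing headers as a plain dict is a simplification that ignores repeated header lines:

```python
from html.parser import HTMLParser

# "none" is shorthand for "noindex, nofollow".
BLOCKING = {"noindex", "none"}


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            for token in attr.get("content", "").split(","):
                self.directives.add(token.strip().lower())


def has_noindex(html: str, headers: dict[str, str]) -> bool:
    """True if the page's HTML or its X-Robots-Tag header carries noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    header_tokens = set()
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            for token in value.split(","):
                # Drop an optional user-agent prefix such as "googlebot: noindex".
                header_tokens.add(token.split(":")[-1].strip().lower())
    return bool((parser.directives | header_tokens) & BLOCKING)
```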

Password Protection & Access Control

If your entire site, or significant portions of it, are hidden behind a password login, Google’s crawlers (which don’t have login credentials) won’t be able to access and index that content. This is fine for private member areas, but public-facing content needs to be accessible.

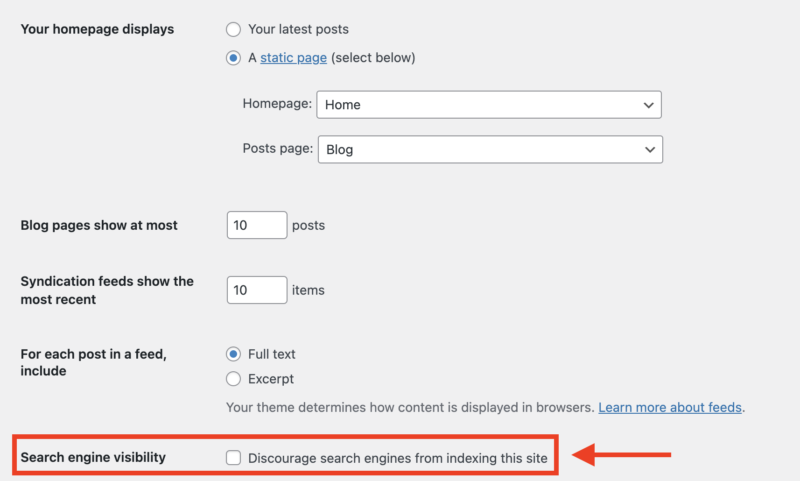

CMS-Specific Settings (e.g., WordPress)

Many content management systems have built-in options to discourage search engine visibility. In WordPress, for instance, there’s a checkbox under Settings > Reading labeled “Discourage search engines from indexing this site.” If it is checked, WordPress adds a `noindex` tag, effectively hiding your site. Always double-check these platform-specific settings, and make sure this box is not checked on your live site.

Checking this box in WordPress asks search engines not to index any page of your site.

Technical Issues: When Your Site Isn’t Playing Nice with Google

Beyond explicit blocking, a host of technical issues can throw a wrench in Google’s ability to crawl, render, and index your website effectively.

Poor Site Architecture & Internal Linking – The Confusing Roadmap

If your site has a convoluted navigation structure, if important pages are buried deep within many clicks, or if pages aren’t logically linked to each other (orphaned pages), Googlebot might struggle to discover all your content. A clear, hierarchical structure with intuitive internal linking helps crawlers understand the relationships between your pages and distribute link equity.
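Click depth and orphaned pages can both be found with a breadth-first search over your internal links. This sketch assumes you already have a map of each page to the internal pages it links to (for instance, exported from a crawler) plus a full list of pages from your CMS or sitemap; the function names and example URLs are hypothetical:

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage: fewest clicks needed to reach each page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS = shortest click path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def orphan_pages(links: dict[str, list[str]], all_pages: list[str], home: str = "/") -> list[str]:
    """Pages that exist (e.g. in your sitemap or CMS) but no internal link path reaches."""
    reachable = set(click_depths(links, home))
    return sorted(set(all_pages) - reachable)


if __name__ == "__main__":
    site = {
        "/": ["/services", "/blog"],
        "/services": ["/services/seo"],
        "/blog": ["/blog/post-1"],
        "/blog/post-1": ["/"],
    }
    pages = ["/", "/services", "/services/seo", "/blog", "/blog/post-1", "/old-landing-page"]
    print(click_depths(site))         # {'/': 0, '/services': 1, '/blog': 1, '/services/seo': 2, '/blog/post-1': 2}
    print(orphan_pages(site, pages))  # ['/old-landing-page']
```

Pages with a large depth are candidates for links from higher-level pages, and anything in the orphan list needs at least one internal link pointing to it.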

Server Health & HTTP Status Codes – Is Your Site Actually Reachable?

Frequent server errors (5xx status codes, which indicate server-side problems) or a server that is regularly down mean Googlebot can’t access your pages. Similarly, many 404 (Not Found) errors on important URLs that should have been redirected (with a 301 for permanently moved content) can signal a poorly maintained site and send visitors to dead ends. Reliable web hosting, and knowing when to use a 301 redirect, are crucial.
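When auditing a crawl export, it helps to translate each status code into its practical meaning. A minimal sketch, assuming you already have status codes per URL from a crawler or server log (the `diagnose` function and the example URLs are hypothetical), using the standard `http.HTTPStatus` constants:

```python
from http import HTTPStatus


def diagnose(status: int) -> str:
    """Translate an HTTP status code into what it means for Googlebot."""
    if 200 <= status < 300:
        return "ok: page is reachable"
    if status in (HTTPStatus.MOVED_PERMANENTLY, HTTPStatus.PERMANENT_REDIRECT):
        return "permanent redirect: fine for content that has moved for good"
    if status in (HTTPStatus.FOUND, HTTPStatus.SEE_OTHER, HTTPStatus.TEMPORARY_REDIRECT):
        return "temporary redirect: switch to 301 if the move is permanent"
    if status in (HTTPStatus.NOT_FOUND, HTTPStatus.GONE):
        return "missing: 301-redirect it if the page has a replacement"
    if 500 <= status < 600:
        return "server error: Googlebot cannot fetch this page"
    return "other: review manually"


if __name__ == "__main__":
    # Hypothetical status codes, e.g. exported from a site crawler.
    crawl_results = {
        "https://yourdomain.com/": 200,
        "https://yourdomain.com/old-services": 404,
        "https://yourdomain.com/contact": 503,
    }
    for url, status in crawl_results.items():
        print(f"{status} {url} -> {diagnose(status)}")
```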

Slow Loading Speed – Don’t Keep Google (or Users) Waiting

Slow loading isn’t usually a direct cause of a page not being indexed, but a very slow server can lead Googlebot to crawl fewer of your pages on each visit. More importantly, page speed is a ranking factor and critical for user experience. Google’s Core Web Vitals (Largest Contentful Paint, Interaction to Next Paint, and Cumulative Layout Shift) are the metrics to watch. Tools like Google PageSpeed Insights can help you diagnose speed issues.
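Core Web Vitals are judged against fixed thresholds at the 75th percentile of real page loads. The sketch below encodes Google’s published good/poor cut-offs for LCP, INP (the responsiveness metric that replaced First Input Delay) and CLS; the `rate` function and the sample numbers are illustrative, and real measurements would come from PageSpeed Insights or the Chrome UX Report:

```python
# Google's published thresholds, assessed at the 75th percentile of page loads:
# value <= good is "good", value > poor is "poor", anything in between "needs improvement".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "INP": (200, 500),   # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless score
}


def rate(metric: str, value: float) -> str:
    """Classify one Core Web Vitals measurement."""
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    # Hypothetical 75th-percentile field data for one page.
    page = {"LCP": 3.1, "INP": 180, "CLS": 0.02}
    for metric, value in page.items():
        print(metric, value, rate(metric, value))
```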

Mobile-Friendliness – The Mobile-First Era

Google predominantly uses the mobile version of your content for indexing and ranking (this is called mobile-first indexing). If your site isn’t mobile-friendly—meaning it’s hard to use on a smartphone, text is too small, links are too close together, or content is wider than the screen—it will significantly harm your indexing and ranking potential. Responsive design is the standard here.
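One quick technical signal of responsive design is a viewport meta tag with `width=device-width`; without it, mobile browsers lay the page out at desktop width and shrink it to fit. It is necessary but not sufficient for a mobile-friendly page. A minimal check, using the hypothetical helper `has_responsive_viewport` on HTML you have already fetched:

```python
from html.parser import HTMLParser


class ViewportParser(HTMLParser):
    """Remember the content of the page's <meta name="viewport"> tag, if any."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport = None  # stays None when the page has no viewport tag

    def handle_starttag(self, tag, attrs):
        attr = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attr.get("name", "").lower() == "viewport":
            self.viewport = attr.get("content", "")


def has_responsive_viewport(html: str) -> bool:
    """True if the page declares width=device-width, the usual responsive-design setting."""
    parser = ViewportParser()
    parser.feed(html)
    parser.close()
    if parser.viewport is None:
        return False
    settings = {}
    for part in parser.viewport.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    return settings.get("width") == "device-width"
```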

JavaScript & SEO – A Complex Dance

Modern websites rely heavily on JavaScript to create dynamic and interactive experiences. While Googlebot has become much better at rendering and understanding JavaScript, issues can still arise. If critical content or links are loaded only via JavaScript after complex user interactions, or if client-side rendering is poorly implemented, Google might miss important information. For JavaScript-heavy sites, consider server-side rendering (SSR) or static pre-rendering so key content is present in the initial HTML; Google now describes dynamic rendering as a workaround rather than a long-term solution.
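A rough way to see whether critical content depends on JavaScript is to look for it in the raw HTML, before any scripts run. The sketch below assumes you have the unrendered HTML (what “View Page Source” shows, or an HTTP client’s response body) and a few key phrases to look for; `missing_from_raw_html` is a hypothetical helper. Because Googlebot does render JavaScript, a missing phrase is a warning sign worth checking with URL Inspection, not proof that Google can’t see it:

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect text present in the HTML itself, ignoring <script> and <style> bodies."""

    def __init__(self) -> None:
        super().__init__()
        self._skipping = 0  # > 0 while inside <script> or <style>
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skipping += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skipping:
            self._skipping -= 1

    def handle_data(self, data):
        if not self._skipping:
            self.chunks.append(data)


def missing_from_raw_html(raw_html: str, key_phrases: list[str]) -> list[str]:
    """Return the key phrases that do NOT appear in the un-rendered HTML text."""
    parser = TextExtractor()
    parser.feed(raw_html)
    parser.close()
    # Collapse whitespace so phrases split across lines or elements still match.
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return [p for p in key_phrases if " ".join(p.split()).lower() not in text]


if __name__ == "__main__":
    spa_shell = '<div id="root"></div><script>renderPage("Our SEO services")</script>'
    ssr_page = "<main><h1>Our SEO services</h1></main>"
    print(missing_from_raw_html(spa_shell, ["Our SEO services"]))  # ['Our SEO services']
    print(missing_from_raw_html(ssr_page, ["Our SEO services"]))   # []
```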

Poor Content Quality

Google’s primary goal is to provide users with high-quality, relevant, and trustworthy search results. If your website’s content doesn’t meet these criteria, it may struggle to get indexed or to rank well. Make sure your content is genuinely useful and satisfies what users are searching for.

Lack of Quality or “Thin” Content

If your pages have very little unique text, offer no real value, or are stuffed with keywords without substance, Google might deem them “thin content.” This can include pages with mostly images and little descriptive text, auto-generated content, or doorway pages created solely to funnel users elsewhere. Google wants to see content that demonstrates E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness).

Duplicate Content Issues

Presenting the same or very similar content on multiple URLs (either within your own site or copied from other websites) can confuse search engines and dilute your ranking signals. This can lead Google to choose not to index some of the duplicate versions. Use canonical tags (`rel="canonical"`) to indicate your preferred version of a page if similar content must exist on multiple URLs.
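To confirm which version a page declares as canonical, you can read the tag directly. A minimal sketch (the `canonical_url` helper and the example URLs are hypothetical) that resolves relative `href` values against the page URL and treats missing or conflicting tags as having no usable canonical:

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collect href values from <link rel="canonical"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        attr = {name: (value or "") for name, value in attrs}
        if tag == "link" and "canonical" in attr.get("rel", "").lower().split():
            self.hrefs.append(attr.get("href", ""))


def canonical_url(html: str, page_url: str) -> Optional[str]:
    """Absolute canonical URL declared by the page, or None if missing or ambiguous."""
    parser = CanonicalParser()
    parser.feed(html)
    parser.close()
    unique = set(parser.hrefs)
    if len(unique) != 1:
        # No tag, or tags pointing at different URLs: no usable canonical.
        return None
    return urljoin(page_url, unique.pop())


if __name__ == "__main__":
    html = '<head><link rel="canonical" href="/shoes/red-sneakers"></head>'
    print(canonical_url(html, "https://yourdomain.com/shoes/red-sneakers?utm_source=newsletter"))
    # https://yourdomain.com/shoes/red-sneakers
```

Keep in mind that Google treats `rel="canonical"` as a strong hint rather than a directive; the URL Inspection tool shows the “Google-selected canonical” it actually chose.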

Spammy Practices or Policy Violations

If your site engages in practices that violate Google’s spam policies (part of Google Search Essentials, formerly the Webmaster Guidelines), such as cloaking, hidden text, aggressive keyword stuffing, or participation in link schemes, it might not only fail to get indexed but could also face manual penalties.

No Inbound Links (Backlinks)

Google discovers a significant portion of new web content by following links from existing, known websites. If your brand-new website has zero external links pointing to it (backlinks), it’s like an isolated island that’s harder for Google’s discovery ships to find. While Google can find sites through sitemap submissions and indexing requests in Google Search Console, backlinks act as endorsements and pathways, often speeding up discovery and signaling importance.

Quality Over Quantity

Not all backlinks are created equal. A link from a reputable, relevant website carries far more weight than dozens of links from low-quality or spammy sites. Focus on earning natural, high-quality backlinks over time.

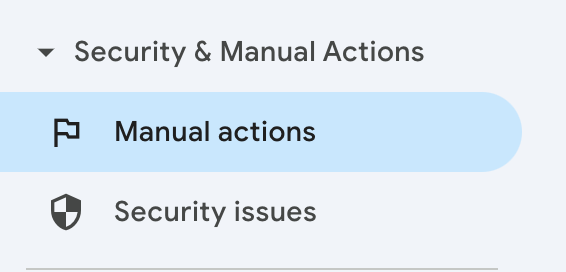

Manual Actions or Security Issues

In more severe cases, your site might be invisible or buried because of direct intervention from Google or because it has been compromised. Google Search Console reports both kinds of problems.

Manual Actions

If Google’s human reviewers find that your site significantly violates Google’s spam policies, they can apply a manual action (a penalty). This can lead to some or all of your site being demoted in rankings or, worse, removed from the index entirely. You can check for manual actions in the Manual actions report in Google Search Console.

Security Issues

If your site has been hacked, infected with malware, or is involved in phishing schemes, Google will likely flag it as unsafe, which can mean warnings shown to searchers or affected pages being removed from results to protect users. Google Search Console’s Security issues report lists these problems.

Steps to Get Your Website on Google’s Radar: Action Plan

Feeling overwhelmed? Don’t be! Here’s a practical checklist to troubleshoot and improve your site’s visibility:

1. Set Up and Master Google Search Console (GSC)

This free tool is your direct line of communication with Google. Verify your site, submit your XML sitemap, and regularly monitor Google Search Console for:

- Page Indexing Report (formerly Coverage): See which pages are indexed, which aren’t, and why.

- URL Inspection Tool: Submit individual URLs for indexing, see how Googlebot views your page, and test live URLs.

- Sitemaps: Ensure your sitemap is submitted and processed without errors.

- Manual Actions & Security Issues: Check these sections for any critical alerts.

2. Thoroughly Audit `robots.txt` and Meta Tags

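Double-check your `robots.txt` file for any unintended `Disallow` directives, and inspect the HTML source and HTTP headers of your key pages for `noindex` directives. In GSC, the robots.txt report (which replaced the old robots.txt Tester) shows the robots.txt files Google found for your site and any problems parsing them.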

3. Craft High-Quality, E-E-A-T Content

Focus on creating original, valuable, and in-depth content that genuinely helps your target audience and showcases your experience, expertise, authoritativeness, and trustworthiness (E-E-A-T). Address user intent and conduct keyword research to understand what your audience is searching for.

4. Optimize Site Structure, Navigation, and Internal Linking

Ensure your site is easy for both users and search engines to navigate. Create a logical hierarchy and link relevant pages together using descriptive anchor text. An HTML sitemap can also be beneficial for users.

5. Prioritize Mobile-Friendliness and Page Speed

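Check your site’s speed and Core Web Vitals with PageSpeed Insights, and test how your pages behave on phones using real devices or the device emulation in Chrome DevTools. (Google retired its standalone Mobile-Friendly Test in December 2023.) Address any issues to ensure a smooth experience on all devices and fast loading times.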

6. Build a Healthy Backlink Profile (Ethically)

Start by creating amazing content that others will want to link to. Consider guest blogging on relevant, reputable sites, participating in industry communities, and ensuring your business is listed in relevant local directories if applicable. Creating social media profiles and linking to your site can be an initial step.

7. Ensure Your Site is Secure (HTTPS)

Use HTTPS (via an SSL/TLS certificate) to encrypt data between your site and its visitors. Keep your CMS, plugins, and themes updated to patch security vulnerabilities.

8. Be Patient and Persistent

SEO is a marathon, not a sprint, especially for new websites. It can take weeks, or even months, for a new site to gain traction and visibility. Consistently apply best practices, monitor your progress, and adapt your strategy as needed.

Seeking Expert Help: When to Call in the SEO Cavalry

While many initial indexing issues can be resolved with the steps above, there are times when bringing in an SEO professional or agency is a wise investment:

- You’ve Tried Everything: If you’ve diligently worked through the common issues and your site still isn’t appearing after a reasonable period (a couple of months), an expert eye might spot deeper technical problems or strategic gaps.

- Lack of Time or In-House Expertise: SEO is a specialized and ever-evolving field. If you or your team lack the time or deep knowledge to implement and manage an effective SEO strategy, professionals can bridge that gap.

- Complex Technical SEO Challenges: Issues related to JavaScript rendering, international SEO (hreflang), complex site migrations, or recovering from penalties often require advanced expertise.

- Highly Competitive Niches: If you’re in a market where competitors have strong, established SEO, an expert can help you devise a strategy to gain a foothold.

When looking for an SEO expert, seek transparency, a proven track record (case studies, testimonials), and a commitment to ethical, white-hat practices. Avoid anyone promising guaranteed #1 rankings or using shady tactics.

Navigating Your Way into Google’s Good Graces

Launching a new website is an exciting milestone, and the initial invisibility in Google can be disheartening. However, as we’ve explored, the reasons are often identifiable and, more importantly, fixable. It’s rarely a single issue but rather a combination of factors, from Google needing time to discover your site, to accidental technical blockades or the need for more robust content and authority signals.

Think of getting your site indexed and ranked as building a relationship with Google. You need to introduce yourself properly (sitemaps, Google Search Console), ensure your communication is clear (no `robots.txt` or `noindex` barriers), present yourself well (quality content, good user experience, fast and mobile-friendly site), and build credibility in your neighborhood (quality backlinks).

Overcoming the Hurdles & Finding Your Audience

The journey to Google visibility requires a methodical approach. Start with the basics: make sure Google can find and crawl your site. Then, focus on providing value to your users through excellent content and a seamless experience. SEO isn’t a one-time task; it’s an ongoing process of creation, optimization, and adaptation.

If you encounter persistent issues, don’t be afraid to seek knowledge or professional help. The digital landscape is vast, but with the right strategies, your new website can move from being a hidden gem to a discoverable destination.

Helpful Resources & Services to Consider

Google’s Own Tools (Essential & Free):

| Tool | What it’s for |
| --- | --- |
| Google Search Console | Your primary diagnostic and communication tool with Google |
| Google Analytics | Understanding your website traffic and user behavior |
| Google PageSpeed Insights | Testing and improving site speed and Core Web Vitals |
| Lighthouse (in Chrome DevTools) | Auditing performance, accessibility, and SEO basics on an emulated mobile device |
| Google Search Central Blog & Documentation | A wealth of official information and best practices |

Comprehensive SEO Platforms (Subscription-based, often with free trials/limited versions):

| Platform | Strengths |
| --- | --- |
| Ahrefs | Excellent for backlink analysis, keyword research, site audits, and competitor research |
| Semrush | Another powerful all-in-one SEO suite with similar features to Ahrefs |
| Moz Pro | Offers tools for keyword research, link building, site audits, and rank tracking |

Conclusion

By patiently and systematically addressing these potential roadblocks, and by committing to creating a valuable online presence, you’ll significantly increase the chances of your newly built website not just being found in Google, but thriving there.

Want to stand out online with a stunning, high-performing website? Partner with a reputable Web Developer Company like Optimum Impact to boost your search engine visibility and turn clicks into customers. Book your free consultation today!